Those who have followed me since the days of Flash Player and Adobe Media Server know that I’ve spent the last 15 years developing encoders, players and, more generally, software architectures to enable, enhance and optimize video streaming at scale. I’ve achieved many professional successes working on innovative projects for companies like NTT Data, Sky, Intel Media, VEVO and many others. Along the way I’ve had the opportunity to meet inspiring people: managers, engineers, colleagues and ultimately friends who helped me grow as an engineer and as a video streaming architect.

In particular, in 2021 I celebrate the 10th anniversary of Hyper, one of those achievements. But let’s start from the beginning:

Conception

In 2008 I started collaborating with Value Team, a leading system integrator in Italy (later acquired by the global innovator NTT Data). The BBC’s iPlayer had just been released and media clients started asking for something similar, so NTT Data contacted me to design a high-performance platform (encoder and player) for the nascent market of catch-up TV and OTT services. The product, VTenc, powered the 2009 launch of the first catch-up TV service in Italy (La7.tv, owned by Telecom Italia).

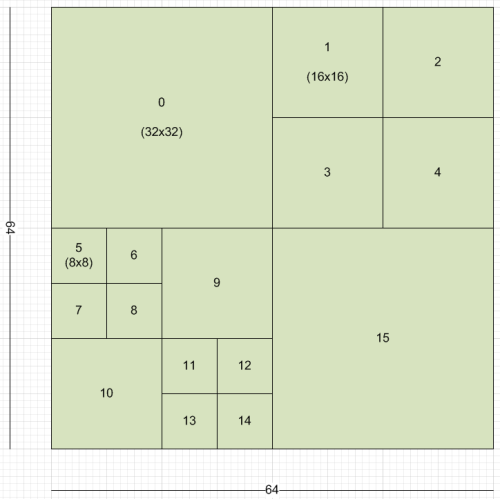

The most innovative feature of VTenc was the ability to encode a single video in parallel, splitting it into segments that were then distributed across a computing grid. The idea emerged after a discussion with Anthony Rose and his team (the creators of the BBC’s iPlayer), in which they stressed that one of the main problems in encoding for catch-up TV was the long processing time, which delayed the distribution of the encoded stream after the show had ended on TV.

A few months and many technical challenges later, the feature was ready, and the idea of parallel encoding was successfully applied to La7.tv: we received the live program in “parts”, emitted every time there was an advertisement slot. Each part, usually 20-25 minutes long, was divided into smaller chunks, encoded in parallel and then reassembled and packetized with a map-reduce style paradigm. Thanks also to a client-side playlist, the final result was ready for streaming just 10 minutes after the conclusion of the live show.

This was an incredible result for the time, because commercial encoders required many hours for an accurate 2-pass encoding of assets 2-3 hours long. It was also one of the very first implementations of adaptive bitrate streaming in Flash (a custom implementation on Flash Player 9 + AMS, whereas Adobe officially introduced adaptive bitrate only in Flash Player 10).
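The split, parallel encode and reassemble flow described above can be sketched in a few lines of Python. This is only an illustration of the map-reduce idea, not VTenc’s actual code: the names `Chunk`, `split_part`, `encode_chunk` and `encode_part` are hypothetical, a thread pool stands in for the computing grid, and the “encoder” is a placeholder that returns a label instead of video data.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    index: int    # position of the chunk inside the part
    start: float  # start time in seconds
    end: float    # end time in seconds


def split_part(duration: float, chunk_len: float) -> list[Chunk]:
    """Cut one received part into chunks of at most chunk_len seconds."""
    if chunk_len <= 0:
        raise ValueError("chunk_len must be positive")
    chunks = []
    start = 0.0
    while start < duration:
        end = min(start + chunk_len, duration)
        chunks.append(Chunk(len(chunks), start, end))
        start = end
    return chunks


def encode_chunk(chunk: Chunk) -> tuple[int, str]:
    """Hypothetical stand-in for a real encoder call on one chunk."""
    return chunk.index, f"enc[{chunk.start:.0f}-{chunk.end:.0f}]"


def encode_part(duration: float, chunk_len: float, workers: int = 4) -> list[str]:
    """Map: encode every chunk in parallel. Reduce: restore timeline order."""
    chunks = split_part(duration, chunk_len)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(encode_chunk, chunks))
    # pool.map already preserves input order; sorting by index keeps the
    # reduce step explicit and would also hold for out-of-order results.
    return [payload for _, payload in sorted(results)]


if __name__ == "__main__":
    # A 25-minute part cut into 10-minute chunks (illustrative numbers).
    print(encode_part(duration=1500, chunk_len=600))
```

With this approach the wall-clock time is bounded roughly by the slowest chunk plus the reassembly step, rather than by the length of the whole part, which is why the chunk length and the number of workers are the main tuning knobs.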

The birth of Hyper

VTenc kept improving over the following years until, in 2011, NTT Data signed a deal with Sky Italy to provide the encoder for the broadcaster’s upcoming OTT services.

We implemented new features, and they chose VTenc for several key reasons:

– flexible queue management system

– rapid customizability

– high video quality

– high density and scalability

– short time to market.

VTenc evolved into something more complex: NTT Data Hyper was born. The model was different from buying a commercial encoder, which usually had long evolution cycles and well-defined but inflexible road maps. Hyper has been more like a focused, tailor-made and optimized encoding engine, similar to those built by Netflix or Amazon. Often it has also been a sandbox in which to conceive and test new technologies and ideas.

In 2021 we celebrate the first 10 years of Hyper.

A decade of innovative achievements and milestones

Since then we have reached many milestones while facing the challenges of the last decade. Looking back, it has been an exciting journey, professionally intense and enriching. Some achievements deserve a mention:

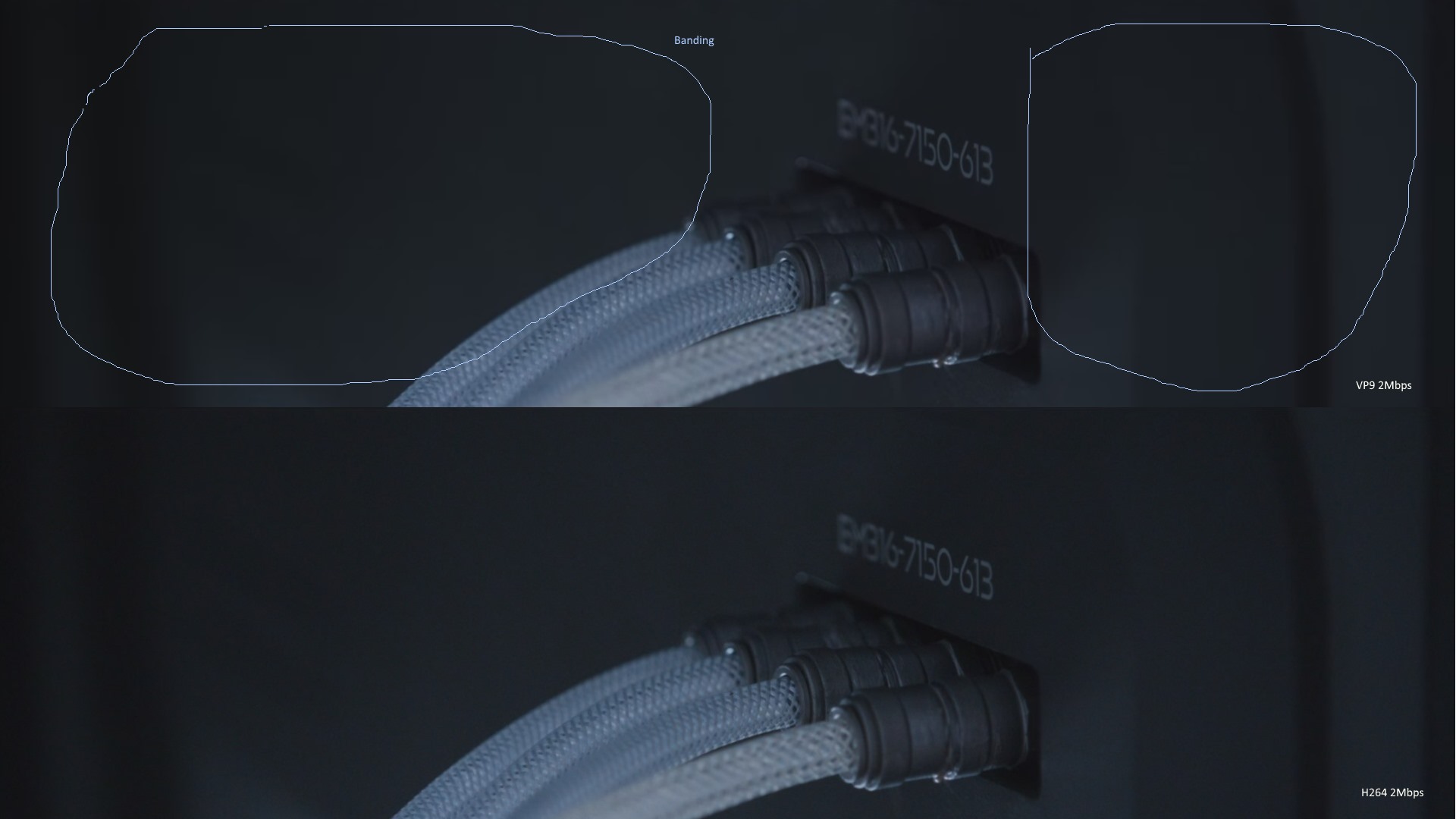

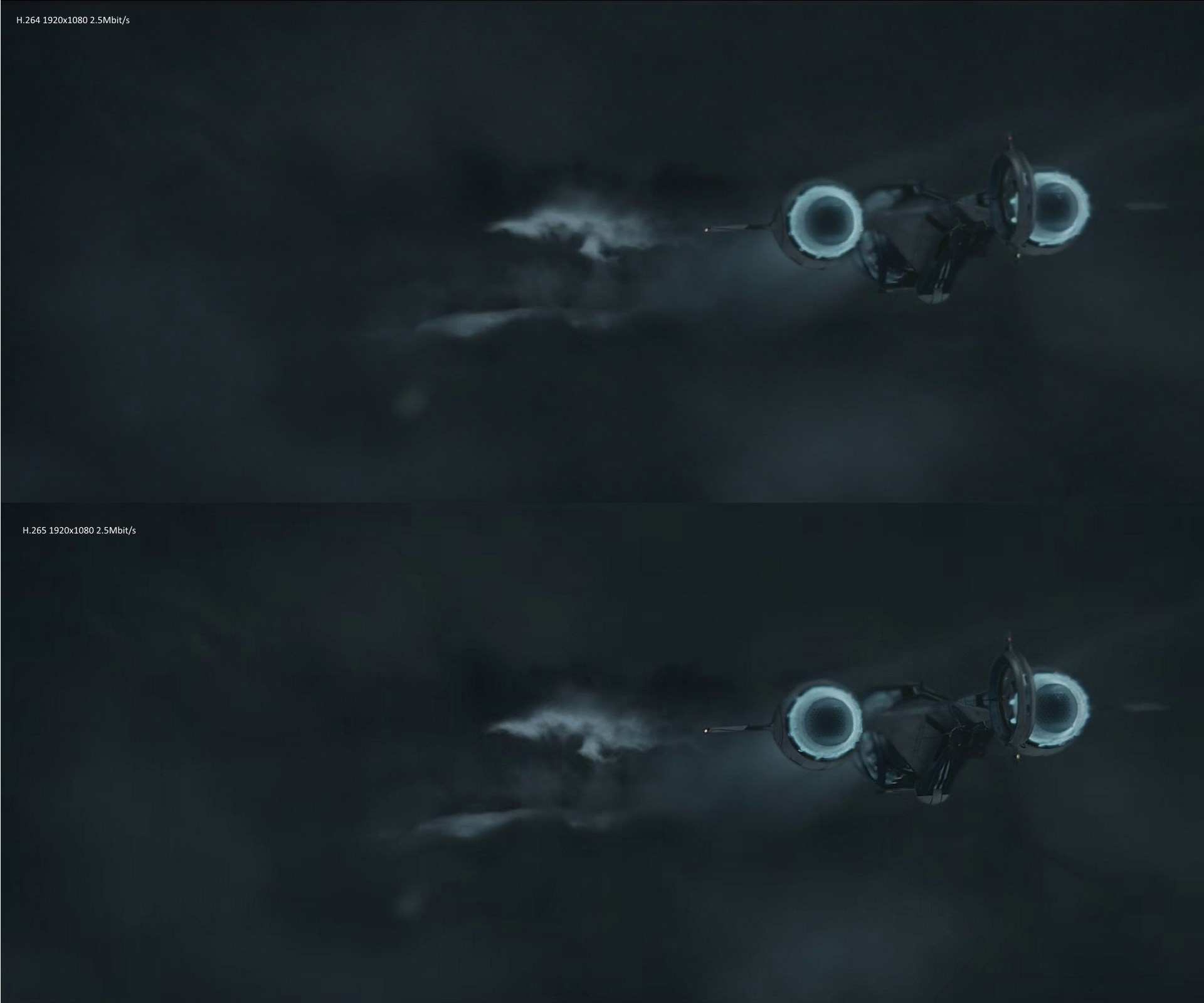

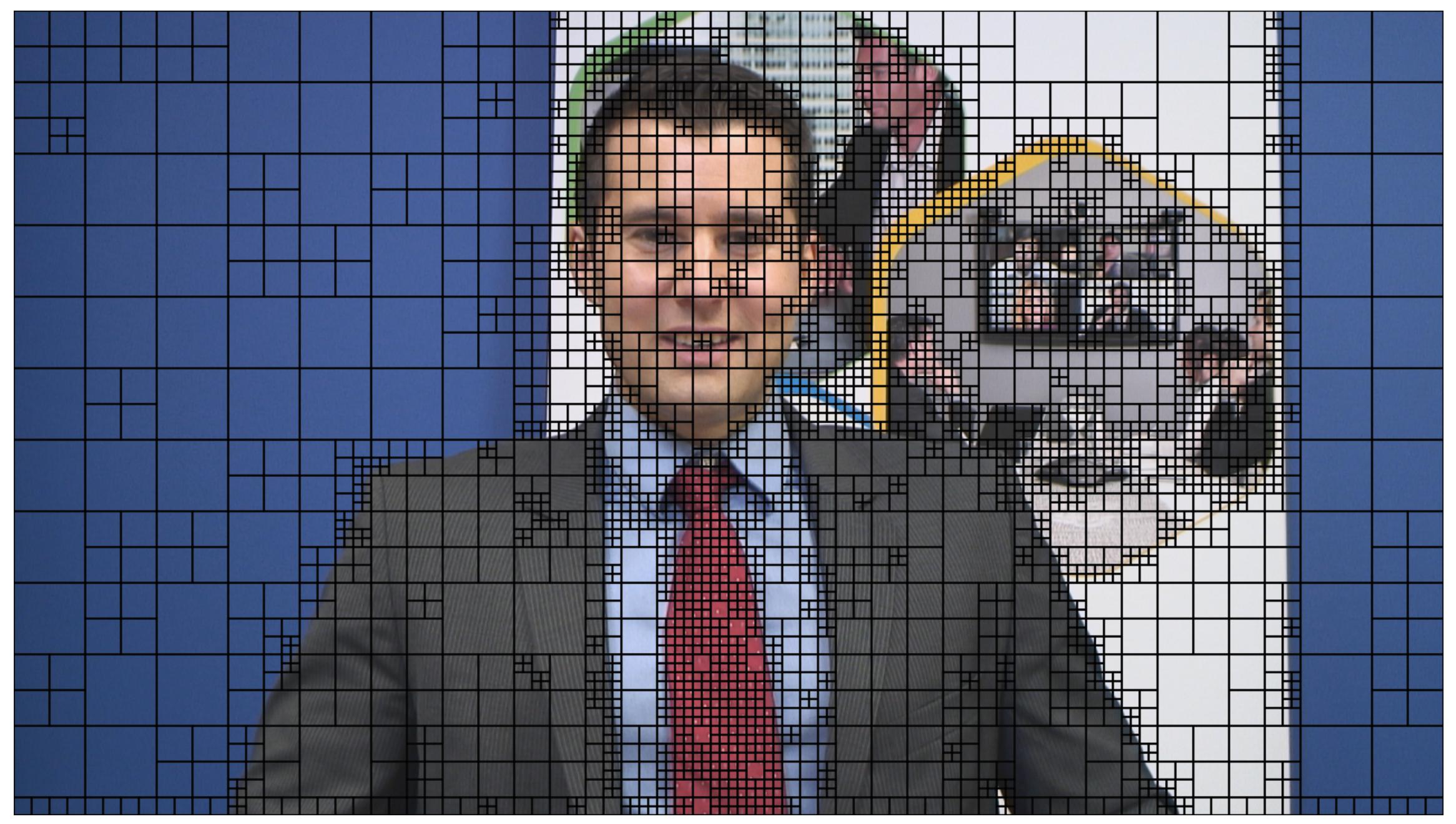

– A long history of content-aware encoding approaches, from the first empirical versions (2013) to the use of ML to implement distinctive “targetMOS” and “targetDevice” encoding modes (2017+). I’ve always been a fan of “contextual optimizations”: I started my experiments in the days of Flash Video and presented some initial ideas at Adobe Max 2009-11, and I’ve since had the opportunity to implement those paradigms in the industry in various “flavors”.

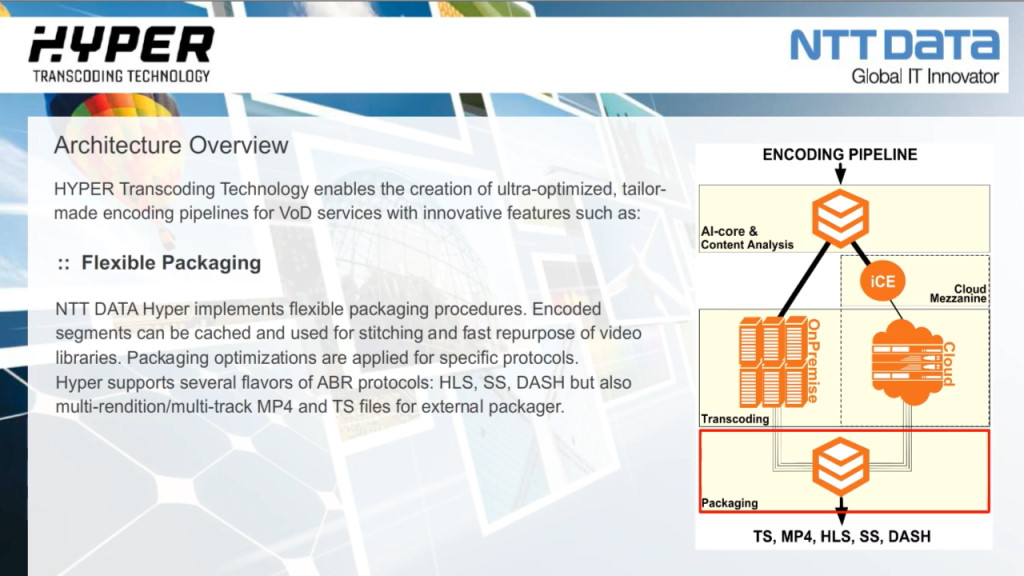

– Internal caching logic for elementary streams that allows previously encoded assets to be repurposed quickly, without running a new, expensive encoding. With this logic we have many times been able to repurpose whole libraries (tens of thousands of assets) in a matter of days. In this way, adding a new audio format, changing idents, parental notices or other elements in the content playlist, or adding a new packetization format (e.g. a new version of HLS) has always been quick and inexpensive.

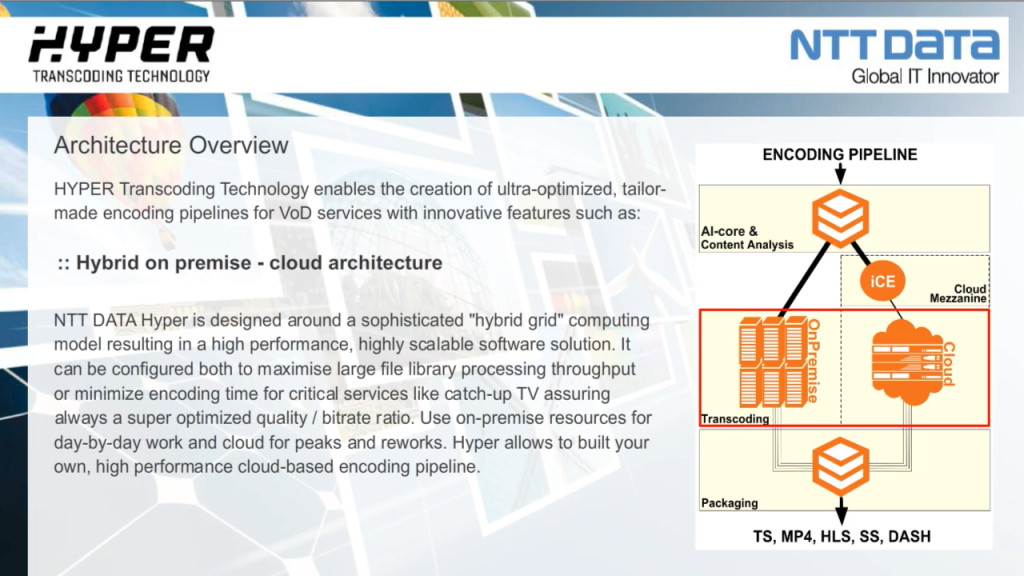

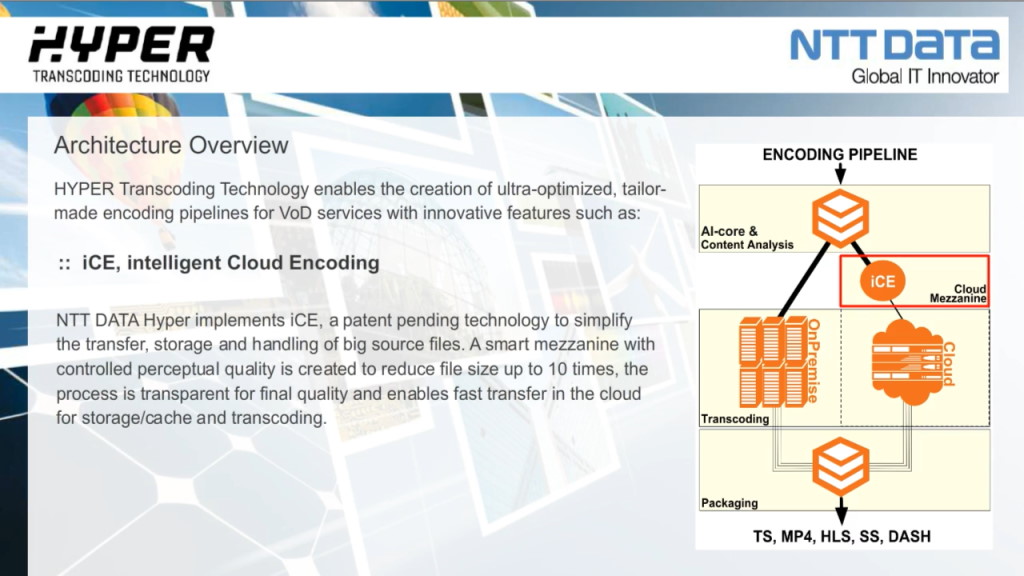

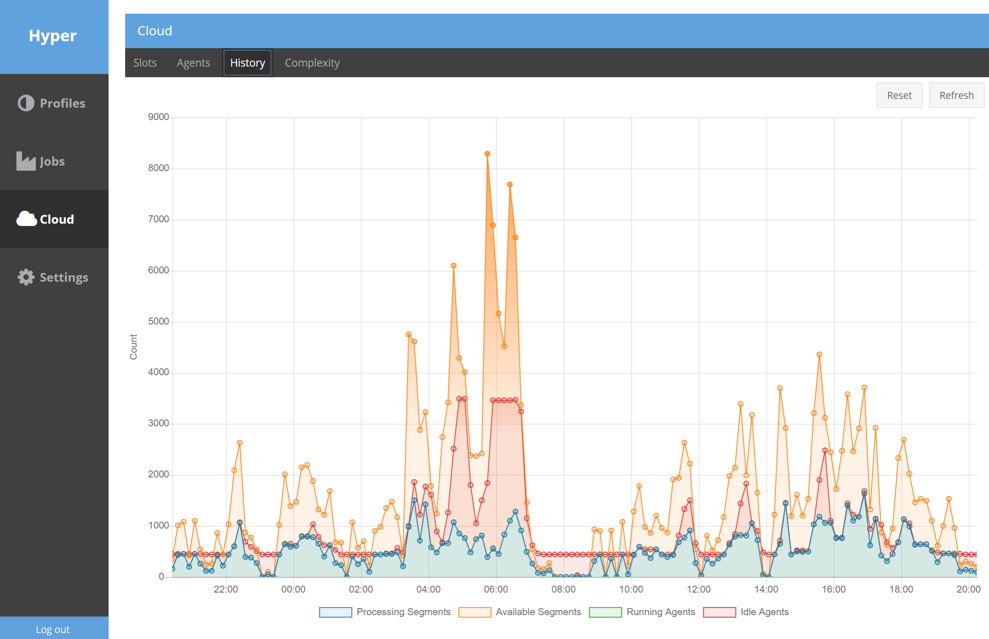

– In 2016 Hyper evolved from grid computing to a hybrid cloud paradigm, with on-prem resources that cope with the baseline workload and cloud resources that absorb the peaks. Because the software had been designed from the beginning around agnostic services and flexible work queues, the hybridization was a natural step. Resources can be partitioned to obtain maximum throughput and cost efficiency on some queues and minimum time-to-output on others.

– In this hybrid context my team designed a 2-step technique that generates, on the fly, a smart mezzanine with controlled perceptual quality, so that very large high-quality sources can be moved to the cloud quickly and conveniently for parallelized encoding (with a bandwidth reduction of up to an order of magnitude).

– Full cloud deployments on AWS and GCloud that quickly and dynamically scale from just 2 on-demand instances to thousands of spot instances (“elastic texture”), with optimized scaling logic that minimizes infrastructure costs and reacts faster than standard scaling systems like autoscaling groups.
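The baseline-plus-peak idea behind the hybrid and full-cloud deployments can be illustrated with a deliberately simple sizing rule. This is a sketch under stated assumptions, not Hyper’s actual scaling logic (which, as noted above, is optimized beyond what is shown here): the function `plan_workers`, its parameters and the numbers in the example are all hypothetical. The baseline stands for always-on capacity (on-prem resources, or the 2 on-demand instances), and the burst stands for capacity added only for peaks (cloud or spot instances).

```python
import math


def plan_workers(queued_jobs: int, jobs_per_worker: int,
                 baseline: int = 2, burst_cap: int = 1000) -> tuple[int, int]:
    """Return (baseline_workers, burst_workers) for the current backlog.

    Hypothetical rule: baseline workers are always running; burst workers
    are added only when the backlog exceeds what the baseline can absorb,
    and never beyond burst_cap.
    """
    if jobs_per_worker <= 0:
        raise ValueError("jobs_per_worker must be positive")
    needed = math.ceil(queued_jobs / jobs_per_worker)
    burst = min(max(0, needed - baseline), burst_cap)
    return baseline, burst


if __name__ == "__main__":
    print(plan_workers(queued_jobs=10, jobs_per_worker=5))      # quiet period
    print(plan_workers(queued_jobs=5000, jobs_per_worker=5))    # peak
```

Running a rule like this per queue is one way to express the trade-off mentioned above: a queue tuned for cost efficiency would use a high `jobs_per_worker`, while a queue tuned for minimum time-to-output would use a low one.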

Now that Hyper has turned 10, and after several million encoding jobs, in 2021 we are going to finalize Hyper v2 and tackle new challenges (VVC, AV1, complete refactoring, perceptual-aware delivery, agnostic architecture to apply massively parallelized processing to other contexts), but that’s a matter for an entirely new story… For now, let’s celebrate:

Happy 10th birthday, Hyper!